In my previous post I wrote about 4 ways to load data onto Hadoop on Azure.

After publishing the post I started to look into a fifth way – importing data from the Windows Azure Datamarket

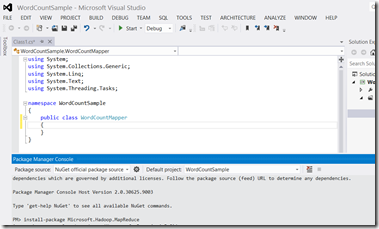

Hadoop on Azure includes the ability to provide it with credentials to the market place, a query to run and the name of a hive table to create and will do the rest – query the data through the marketplace, store it on HDFS and create a hive table on top.

To configure that – click the ‘Manage Cluster’ tile on the Hadoop on Azure homepage

and then click on the ‘DataMarket’ button

To get to this screen, in which you can provide all the details

You can get (and test) the query from the marketplace’s query builder tool –

After entering all the details and clicking the ‘import data’ button a job will get started, and when completed you will have a hive table with the dataset (you can leave this screen and check back on the job history later, naturally it is all done asynchronously).

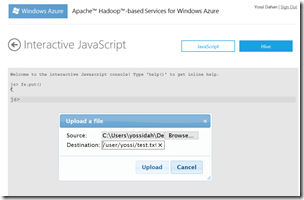

The best way to validate that (after of course making sure the job had completed successfully through the job history screen) is to use the hive interactive console – in the Hadoop on Azure homepage click the ‘Interactive Console’ tile and be sure to click the ‘Hive’ button on the top left.

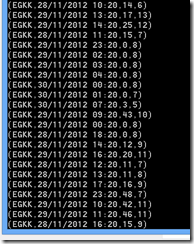

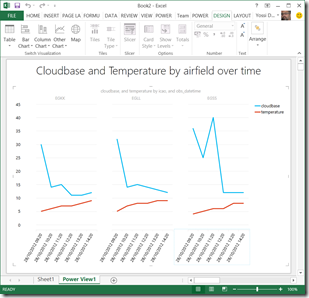

It will take a few seconds for the tables dropdown to get populated, so bare with it, but once it has you should be able to see the table name you’ve entered in the list, and if you do, you should be able to run QL queries on it through the interactive console, I (eventually, see note below) loaded data from the ThreeHourlyForecast table for Heathrow from the met office’s data feed and so I could execute a query such as ‘select * from lhrmetdata’ and see the results displayed in the console. result.

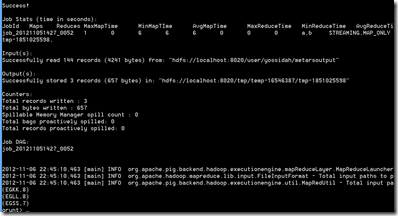

However – with this particular data set I did bump into a bit of a glitch and what is probably a bug in this preview release – when I ran the import data job, the job info page reported the ‘Completed Successfully’ status –

…but the dropdown in the hive interactive console never showed my table, nor did running the ‘show tables’ command.

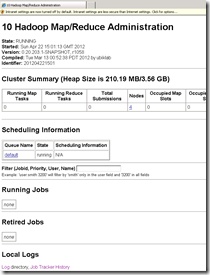

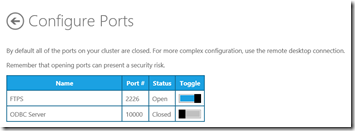

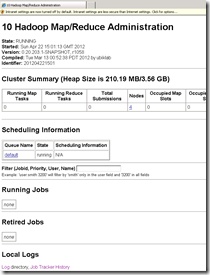

I poked around the file system on the server (by RDP-ing into it and using the web interface as well as the command line, and I could see the data feed had been downloaded successfully, so I could not figure out what had gone wrong, until ‘jpposthuma’ on HadoopOnAzureCTP Yahoo group provided a spot on advice – to check the downloader.exe log file and so – I’ve opened the MapReduce web console on the server (after RDP-ing into it) and I clicked the log link at the bottom left –

The downloader.exe log file was the first listed in the directory listing

I downloaded the file and opened it in notepad (it is not viewed well in the browser), and the problem became clear immediately (I’ve highlighted the key area) –

2012-04-22 17:28:00,645 INFO Microsoft.Hadoop.DataLoader.DataLoaderProgram: Start DataLoader …

2012-04-22 17:28:00,708 INFO Microsoft.Hadoop.DataLoader.DataLoaderProgram: Overwriting flag [-o] is not set

2012-04-22 17:28:00,739 INFO Microsoft.Hadoop.DataLoader.DataLoaderMediator: Begin transfer

2012-04-22 17:28:00,739 INFO Microsoft.Hadoop.DataLoader.DataLoaderMediator: Transferring schema

2012-04-22 17:28:00,770 INFO Microsoft.Hadoop.DataLoader.ODataSource: Begin exporting schema

2012-04-22 17:28:00,801 INFO Microsoft.Hadoop.DataLoader.ODataSource: build http request to data market: https://api.datamarket.azure.com/Data.ashx/DataGovUK/MetOfficeWeatherOpenData/ThreeHourlyForecast?$top=100

2012-04-22 17:28:04,130 INFO Microsoft.Hadoop.DataLoader.ODataSource: End exporting schema

2012-04-22 17:28:04,130 INFO Microsoft.Hadoop.DataLoader.FtpChannel: Begin pushing schema

2012-04-22 17:28:05,708 INFO Microsoft.Hadoop.DataLoader.FtpChannel: Ftp response code: ClosingData

2012-04-22 17:28:05,708 INFO Microsoft.Hadoop.DataLoader.FtpChannel: End pushing schema

2012-04-22 17:28:05,708 INFO Microsoft.Hadoop.DataLoader.DataLoaderMediator: Transferring data

2012-04-22 17:28:05,723 INFO Microsoft.Hadoop.DataLoader.FtpChannel: Begin pushing data

2012-04-22 17:28:05,786 INFO Microsoft.Hadoop.DataLoader.ODataSource: Begin exporting data

2012-04-22 17:28:05,786 INFO Microsoft.Hadoop.DataLoader.ODataSource: exporting page #0

2012-04-22 17:28:05,786 INFO Microsoft.Hadoop.DataLoader.ODataSource: build http request to data market: https://api.datamarket.azure.com/Data.ashx/DataGovUK/MetOfficeWeatherOpenData/ThreeHourlyForecast?$top=100

2012-04-22 17:28:06,286 INFO Microsoft.Hadoop.DataLoader.ODataSource: End exporting data. Total 100 rows exported

2012-04-22 17:28:06,395 INFO Microsoft.Hadoop.DataLoader.FtpChannel: Ftp response code: ClosingData

2012-04-22 17:28:06,395 INFO Microsoft.Hadoop.DataLoader.FtpChannel: End pushing data. Total 100 rows pushed

2012-04-22 17:28:06,395 INFO Microsoft.Hadoop.DataLoader.DataLoaderMediator: End transfer

2012-04-22 17:28:06,411 INFO Microsoft.Hadoop.DataLoader.DataLoaderMediator: Begin creating Hive table

2012-04-22 17:28:06,442 INFO Microsoft.Hadoop.DataLoader.DataLoaderMediator: Begin HiveCli execution

2012-04-22 17:28:06,442 INFO Microsoft.Hadoop.DataLoader.DataLoaderMediator: cmd = c:\apps\dist\bin\hive.cmd

2012-04-22 17:28:06,442 INFO Microsoft.Hadoop.DataLoader.DataLoaderMediator: params = -v -f c:\apps\dist\logs\userlogs\hiveql\93e6a3e5-4914-4f78-8731-6bd9f2dcb94d.hql

2012-04-22 17:28:07,911 INFO Microsoft.Hadoop.DataLoader.DataLoaderMediator: [HiveCli stderr] Hive history file=C:\Apps\dist\logs\history/hive_job_log_yossidahan_201204221728_335367629.txt

2012-04-22 17:28:08,333 INFO Microsoft.Hadoop.DataLoader.DataLoaderMediator: [HiveCli stdout] CREATE EXTERNAL TABLE lhrmetdata ( ID BIGINT,ForecastSiteCode INT,PredictionId STRING,SiteName STRING,Country STRING,Continent STRING,StartTime TINYINT,Day STRING,Date STRING,TimeStep SMALLINT,SignificantWeatherId SMALLINT,ScreenTemperature SMALLINT,WindSpeed SMALLINT,WindDirection TINYINT,WindGust SMALLINT,VisibilityCode STRING,RelativeHumidity SMALLINT,ProbabilityPrecipitation SMALLINT,FeelsLikeTemperature SMALLINT,UVIndex SMALLINT,PredictionTime TINYINT ) COMMENT ‘external table to /uploads/lhrmetdata/lhrmetdata/content.dat created on 2012-04-22T17:28:06.411+00:00’ROW FORMAT DELIMITED FIELDS TERMINATED BY ’01’ LOCATION ‘/uploads/lhrmetdata/lhrmetdata’

2012-04-22 17:28:08,551 ERROR Microsoft.Hadoop.DataLoader.DataLoaderMediator: [HiveCli stderr] FAILED: Parse Error: line 1:163 mismatched input ‘Date’ expecting Identifier near ‘,’ in column specification

2012-04-22 17:28:09,067 INFO Microsoft.Hadoop.DataLoader.DataLoaderMediator: End HiveCli execution. Return code = 0

2012-04-22 17:28:09,067 INFO Microsoft.Hadoop.DataLoader.DataLoaderMediator: End creating Hive table

2012-04-22 17:28:09,083 INFO Microsoft.Hadoop.DataLoader.DataLoaderProgram: Shutdown DataLoader …

The data feed contained a field named ‘Date’ which is a reserved word and so the parsing of the hive command filed.

However – I now knew I had the feed data stored in HDFS already, and I knew what was wrong, so I could simply execute a slightly modified hive create command changing the column name from Date to TheDate; with the original command provided in the log above this was very easy to figure out –

CREATE EXTERNAL TABLE lhrmetdata ( ID BIGINT,ForecastSiteCode INT,PredictionId STRING,SiteName STRING,Country STRING,Continent STRING,StartTime TINYINT,Day STRING,TheDate STRING,TimeStep SMALLINT,SignificantWeatherId SMALLINT,ScreenTemperature SMALLINT,WindSpeed SMALLINT,WindDirection TINYINT,WindGust SMALLINT,VisibilityCode STRING,RelativeHumidity SMALLINT,ProbabilityPrecipitation SMALLINT,FeelsLikeTemperature SMALLINT,UVIndex SMALLINT,PredictionTime TINYINT ) COMMENT ‘external table to /uploads/lhrmetdata/lhrmetdata/content.dat created on 2012-04-22T17:28:06.411+00:00’ROW FORMAT DELIMITED FIELDS TERMINATED BY ’01’ LOCATION ‘/uploads/lhrmetdata/lhrmetdata’

As expected this command completed successfully and my table now showed in the dropdown list

So – valid reason for failing, was just confusing that the job was reported as successful initially, but I’d expect this to be ironed out before Hadoop on Azure gets released and ultimately – a great way to work with marketplace data!