Last week I got an email from a customer who was surprised to find out that somebody had decided to point a different domain name at their web site (for example, if their site were Contoso.com, somebody pointed Northwind.com at it).

We couldn’t quite figure out why somebody would do that, or whether it’s really a problem, but it certainly made them feel uncomfortable, and I can see why.

Technically there’s not much one can do to prevent others from doing this, and whilst you can go and complain to the registrar of the rogue domain, this is a hassle and will take some time to sort out, so a technical solution is needed to circumvent that.

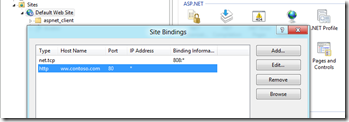

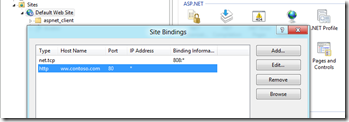

The best approach, as far as I can tell, is to set the host name property in the site bindings in IIS to the correct domain name(s). IIS will then reject any request carrying a different host name and, indeed, on-premises this is what everybody seems to do.

Any request made to the web site using a different domain (easily simulated using the hosts file in C:\Windows\System32\drivers\etc) will result in an HTTP 400 or HTTP 503 error.
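If editing the hosts file is inconvenient, a similar check can be sketched in code by connecting to the real address while overriding the Host header. This is only an illustration under assumptions: the class name HostHeaderProbe and the method BuildRequest are made up for this example, yossitest.cloudapp.net is the test deployment used later in this post, and northwind.com stands in for the rogue domain.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical helper, not part of the original solution.
static class HostHeaderProbe
{
    // Builds a GET request that connects to targetUrl but
    // announces a different host name in the Host header.
    public static HttpRequestMessage BuildRequest(string targetUrl, string spoofedHost)
    {
        var request = new HttpRequestMessage(HttpMethod.Get, targetUrl);
        request.Headers.Host = spoofedHost;
        return request;
    }

    public static async Task Main()
    {
        using (var client = new HttpClient())
        using (var request = BuildRequest("http://yossitest.cloudapp.net/", "northwind.com"))
        using (var response = await client.SendAsync(request))
        {
            // With host-name bindings in place, an error status is expected here.
            Console.WriteLine("Status: " + (int)response.StatusCode);
        }
    }
}
```

Running the same probe with the correct host name should, by contrast, return the normal page.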

To set the host name on a web role instance declaratively one could use the hostHeader attribute of the binding element in the ServiceDefinition.csdef file – this will instruct the fabric to set the value provided in IIS and, as a result, any request made using a different host name will get rejected.

The problem with setting the host name to the production domain is that it would prevent access to the system whilst on staging, where the URL includes a generated GUID: the staging URL is not known at design time and as such cannot be provided in the ServiceDefinition.csdef file.

The solution is to set the site bindings dynamically from within the deployment, and the easiest way to do that is from the OnStart method of the Role –

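    using System;
    using Microsoft.WindowsAzure.ServiceRuntime;

    public class WebRole : RoleEntryPoint
    {
        public override bool OnStart()
        {
            // For information on handling configuration changes
            // see the MSDN topic at http://go.microsoft.com/fwlink/?LinkId=166357.
            try
            {
                FixSiteBindings();
            }
            catch (Exception ex)
            {
                // Log the failure so that it can be inspected after the role has started.
                WriteExceptionToBlobStorage(ex);
            }

            return base.OnStart();
        }

        // FixSiteBindings (shown below) and WriteExceptionToBlobStorage
        // are members of this class.
    }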

Before I dive into my FixSiteBindings method I should point out that during the testing of this I’ve used the method pointed out by Christian Weyer to log any exception in OnStart to blob storage, which was very handy!

So, when the role starts, FixSiteBindings is called, which looks as follows:

    // Requires: using System; using System.Linq;
    // using Microsoft.Web.Administration; using Microsoft.WindowsAzure.ServiceRuntime;
    void FixSiteBindings()
    {
        // The web site name is the role instance ID with the "_Web" postfix
        // ("Web" is the Site name in ServiceDefinition.csdef).
        string webSiteName = RoleEnvironment.CurrentRoleInstance.Id + "_Web";

        using (ServerManager sm = new ServerManager())
        {
            // Find the web site.
            Site site = sm.Sites[webSiteName];
            if (site == null)
                throw new Exception("Could not find site " + webSiteName);

            // Find the binding with host name TBR: this is the one we need to replace.
            Binding b = site.Bindings.FirstOrDefault(binding => binding.Host == "TBR");
            if (b != null)
            {
                // Capture the original values before the binding is removed.
                string bindingInformation = string.Format(@"{0}:{1}:{2}",
                    b.EndPoint.Address, b.EndPoint.Port,
                    RoleEnvironment.DeploymentId + ".cloudapp.net");
                string protocol = b.Protocol;

                site.Bindings.Remove(b);

                // Add a binding with the expected domain: (address:port:hostName), protocol.
                site.Bindings.Add(bindingInformation, protocol);
                sm.CommitChanges();
            }
        }
    }

In this code I use ServerManager (Microsoft.Web.Administration) to manipulate IIS settings and add the necessary bindings, but before I go into the code, let me explain the approach I’ve taken –

Ultimately, when my web site is in production, I need a host header for my domain name. I also need a host header with my staging URL, added dynamically.

As I might be using a VIP swap between staging and production, I need to have both bindings in place all the time: the OnStart code (and any start-up tasks) will not run during a VIP swap, so I won’t get another chance to make any changes. Besides, it is best to change as little as possible between staging and production to keep the system stable across environments.

Last, I need to ensure that the default binding, the one without a host header, does not exist; its absence is what prevents others from pointing other domain names at my deployment.

To achieve all of the above, I concluded that the easiest way was to start with a ServiceDefinition.csdef file that defines the two bindings I need: one with the domain name needed for production and one placeholder with a pre-determined host name for staging, in my case ‘TBR’:

    <?xml version="1.0" encoding="utf-8"?>
    <ServiceDefinition name="HostName" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
      <WebRole name="MvcWebRole1" vmsize="ExtraSmall">
        <Runtime executionContext="elevated"/>
        <Sites>
          <Site name="Web">
            <Bindings>
              <Binding name="Endpoint1" endpointName="Endpoint1" hostHeader="yossitest.cloudapp.net"/>
              <Binding name="Staging" endpointName="Endpoint1" hostHeader="TBR"/>
            </Bindings>
          </Site>
        </Sites>
        <Endpoints>
          <InputEndpoint name="Endpoint1" protocol="http" port="80" />
        </Endpoints>
        <Imports>
          <Import moduleName="Diagnostics" />
          <Import moduleName="RemoteAccess" />
          <Import moduleName="RemoteForwarder" />
        </Imports>
      </WebRole>
    </ServiceDefinition>

When this gets deployed onto Azure I’ve already achieved two of my three requirements: there’s no default binding (as I’ve defined a host name for both bindings) and I’ve got the production binding fully configured. I also have the beginning of my third requirement, as I’ve got a binding for staging, and the known host name makes it easy to find programmatically. The last step is to find that binding and update the host header with the correct value in the role’s OnStart method.

To achieve that I start by figuring out the name of the web site in IIS. This is composed of the current role instance ID, with the name of the web site as set in the ServiceDefinition.csdef file as a postfix, in my case “Web”.

With an instance of the ServerManager I find the web site by name and then look for a binding with the host name ‘TBR’, the one I need to update.

I’m ‘updating’ the binding by removing it and adding one in its place, making sure to use the values from the original one for everything but the host name, which keeps the flexibility of setting these through the ServiceDefinition file.

With the old binding removed and the new one added, I commit the changes through the ServerManager and I’m done: the role should now be set up correctly, allowing access to both production and staging.
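One way to double-check the result is to look at the binding information strings of the site and confirm that none of them has an empty host name. The following is a minimal sketch rather than part of the original solution: the class BindingCheck and its methods are hypothetical names, and the address and GUID in the sample data are placeholders. Inside the role, the input could be taken from site.Bindings.Select(b => b.BindingInformation).

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper for inspecting IIS binding information strings.
static class BindingCheck
{
    // Extracts the host name from binding information of the form "address:port:hostName".
    public static string HostOf(string bindingInformation)
    {
        int lastColon = bindingInformation.LastIndexOf(':');
        return lastColon < 0 ? string.Empty : bindingInformation.Substring(lastColon + 1);
    }

    // Returns the bindings that would accept any host name (empty host part).
    public static List<string> FindCatchAllBindings(IEnumerable<string> bindingInformation)
    {
        return bindingInformation.Where(info => HostOf(info).Length == 0).ToList();
    }

    public static void Main()
    {
        // Placeholder values: a production binding and a staging binding.
        var bindings = new[]
        {
            "10.0.0.4:80:yossitest.cloudapp.net",
            "10.0.0.4:80:0123456789abcdef0123456789abcdef.cloudapp.net",
        };

        Console.WriteLine(FindCatchAllBindings(bindings).Count == 0
            ? "No catch-all binding found."
            : "Warning: a binding without a host name exists.");
    }
}
```

A binding such as "*:80:" would be reported by FindCatchAllBindings, which is exactly the default binding this post sets out to avoid.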

One last thing worth pointing out is that this code must run in elevated mode, otherwise any attempt to change IIS settings will result in an error due to lacking permissions. This can be achieved by adding the <Runtime executionContext="elevated"/> element to the relevant role in the ServiceDefinition.csdef file, as shown above.

It is important to note that this only means that the RoleEntryPoint code will run in elevated mode; the rest of the role’s code will run as normal.

I’ve clearly taken a very specific approach to solve a very specific case. I could have, for example, iterated over all the instance endpoints from the RoleEnvironment class and added the relevant bindings from that, which would be needed if the site had more than one endpoint; I’m sure that there are many variations for the solution above, but I hope that it provides a nice and easy solution for most and a good starting point for others.
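For the multi-endpoint variation mentioned above, a rough sketch could look like the following. It is untested against a real deployment and rests on assumptions: the names SiteBindingHelper, ToBindingInformation and AddStagingBindingsForAllEndpoints are made up for illustration, only plain HTTP endpoints are handled (HTTPS bindings would also need a certificate), and it needs references to Microsoft.Web.Administration and the Azure ServiceRuntime assembly, just like FixSiteBindings.

```csharp
using System;
using System.Linq;
using System.Net;
using Microsoft.Web.Administration;
using Microsoft.WindowsAzure.ServiceRuntime;

// Hypothetical variation of FixSiteBindings; names are illustrative only.
static class SiteBindingHelper
{
    // IIS binding information has the form "address:port:hostName".
    public static string ToBindingInformation(IPEndPoint endpoint, string hostName)
    {
        return string.Format("{0}:{1}:{2}", endpoint.Address, endpoint.Port, hostName);
    }

    // Adds a staging host header binding for every HTTP endpoint
    // of the current role instance.
    public static void AddStagingBindingsForAllEndpoints()
    {
        string webSiteName = RoleEnvironment.CurrentRoleInstance.Id + "_Web";
        string stagingHost = RoleEnvironment.DeploymentId + ".cloudapp.net";

        using (var sm = new ServerManager())
        {
            Site site = sm.Sites[webSiteName];
            if (site == null)
                throw new InvalidOperationException("Could not find site " + webSiteName);

            foreach (var pair in RoleEnvironment.CurrentRoleInstance.InstanceEndpoints)
            {
                RoleInstanceEndpoint endpoint = pair.Value;

                // Skip anything that is not plain HTTP (assumption of this sketch).
                if (!string.Equals(endpoint.Protocol, "http", StringComparison.OrdinalIgnoreCase))
                    continue;

                string info = ToBindingInformation(endpoint.IPEndpoint, stagingHost);

                // Avoid adding the same binding twice if the role restarts.
                if (!site.Bindings.Any(b => b.BindingInformation == info))
                    site.Bindings.Add(info, "http");
            }

            sm.CommitChanges();
        }
    }
}
```

Note that this sketch only adds bindings; any placeholder binding such as ‘TBR’ would still need to be removed as in FixSiteBindings.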